Predictive Maintenance

This post utilizes a publicly available dataset, which can be accessed at DOI.

Below are the first two rows of the dataset, which consists of 227,383 rows. We have five feature columns and five target columns. The feature columns represent system quantities, and the target columns contain respective binary values indicating whether a feature is outside of a previously defined range.

| UDI | Product ID | Type | Air temperature [K] | Process temperature [K] | Rotational speed [rpm] | Torque [Nm] | Tool wear [min] | Machine failure | TWF | HDF | PWF | OSF | RNF |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | M14860 | M | 298.1 | 308.6 | 1551 | 42.8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | L47181 | L | 298.2 | 308.7 | 1408 | 46.3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 |

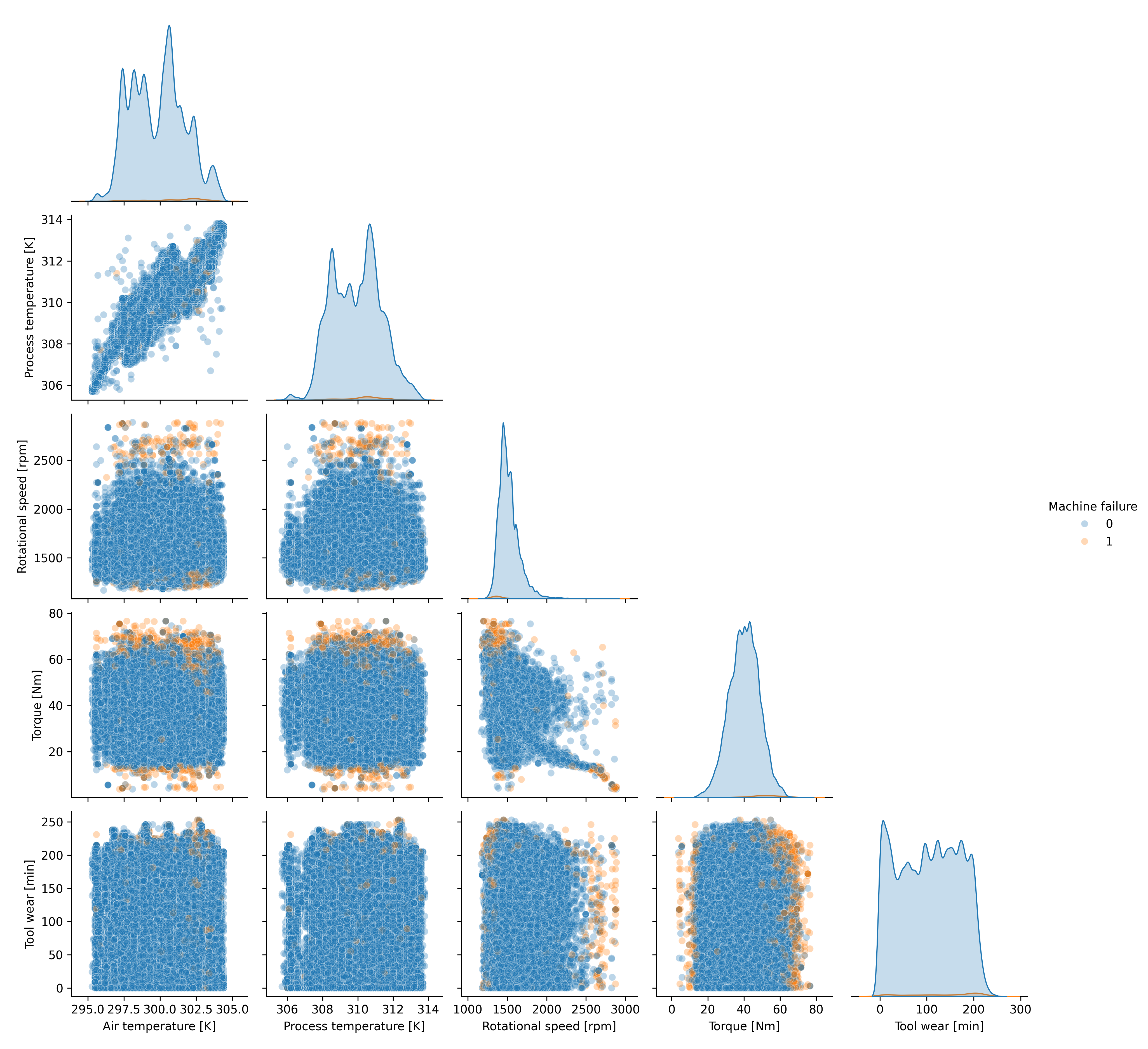

The “Machine Failure” column is set to one if one or more targets are true. We can visualize the machines that failed in a correlation plot.

We can observe that the target properties are defined by values outside of a given range. Our goal is to build a model that classifies machines as failures, trained on the data provided here. Let’s examine the distribution of the targets:

| Machine failure | TWF | HDF | PWF | OSF | RNF |

|---|---|---|---|---|---|

| 0 | 9954 | 9885 | 9905 | 9902 | 9981 |

| 1 | 46 | 115 | 95 | 98 | 19 |

The targets are scarcely met, especially for RNF. We can expect the model to perform best for HDF, OSF, PWF, then TWF, and lastly RNF.

The problem is a classical classification problem, which we can attempt to solve using a Random Forest Classifier. We start by splitting the dataset into training and test sets:

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Next, we define the model with a multi-output classifier. The random forest classifier, by default, categorizes based on a single target column. However, since we have five target columns, this is a multi-output problem.

base_model = RandomForestClassifier(random_state=42)

model = MultiOutputClassifier(base_model, n_jobs=-1)

model.fit(X_train, y_train)

Finally, we report the classification performance:

y_pred = model.predict(X_test)

report = classification_report(y_test, y_pred, target_names=targets)

| precision | recall | f1-score | support | |

|---|---|---|---|---|

| TWF | 0.00 | 0.00 | 0.00 | 11 |

| HDF | 1.00 | 0.76 | 0.87 | 17 |

| PWF | 0.94 | 0.75 | 0.83 | 20 |

| OSF | 0.90 | 0.50 | 0.64 | 18 |

| RNF | 0.00 | 0.00 | 0.00 | 6 |

As expected, the precision is lowest for TWF and RNF. The other three targets are identified with high precision. The mean absolute error is not a good estimator due to the uneven distribution of unique values. We can learn from this result that the dataset is not large enough to cover five targets. But how does the same model look like if we only want to detect if a product fails. For this we use the ‘Machine failure’ column as target and train again a Random Forest Classifier. We get the following classification report:

| precision | recall | f1-score | support | |

|---|---|---|---|---|

| 0 | 1.00 | 1.00 | 1.00 | 44817 |

| 1 | 1.00 | 1.00 | 1.00 | 660 |

This model labels all products in the test set correctly. The accuracy is also one.